KUKA youBot

The Art of Software Control: Simulating and Navigating the KUKA youBot

Overview

Welcome to my robotics journey! This project automates the often labour-intensive task of managing shelves in warehouses and stores. Using a simulated KUKA youBot, my goal was to teach a machine to identify objects, pick them up, navigate through a dynamic environment, and place them in their designated spots.

Skills Used:

Embedded Programming (Lua)

Problem-Solving

Safety-Centric Design

Computer Vision

Planning

Algorithmic Decision Making

Robot Manipulation and Control

Technical Specifications

CoppeliaSim software for simulation

Lua for programming

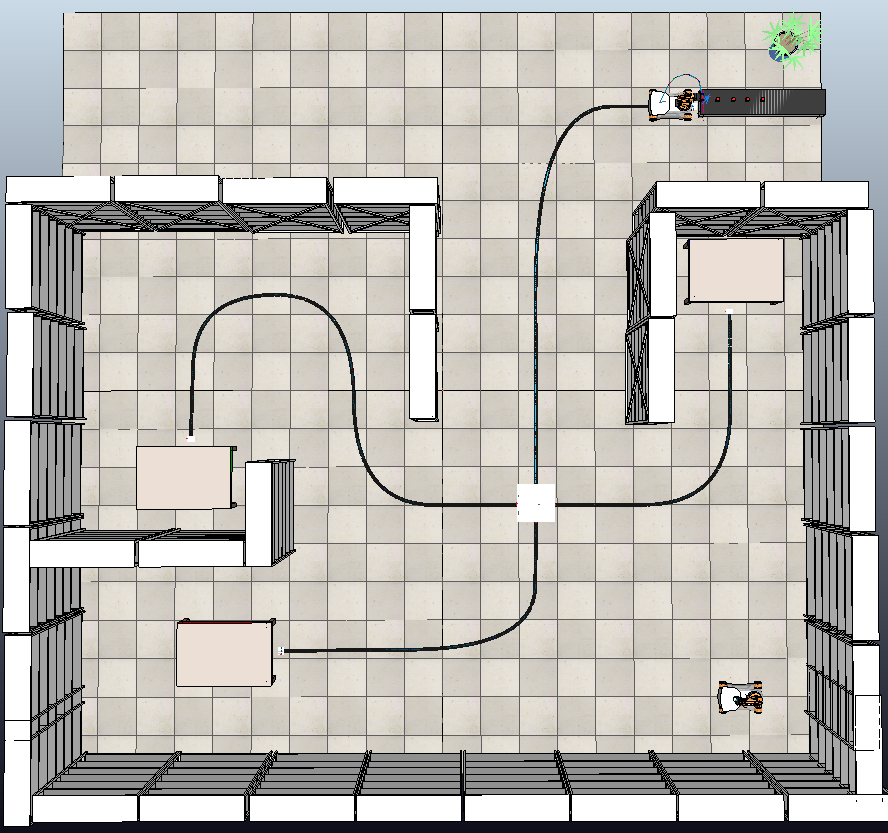

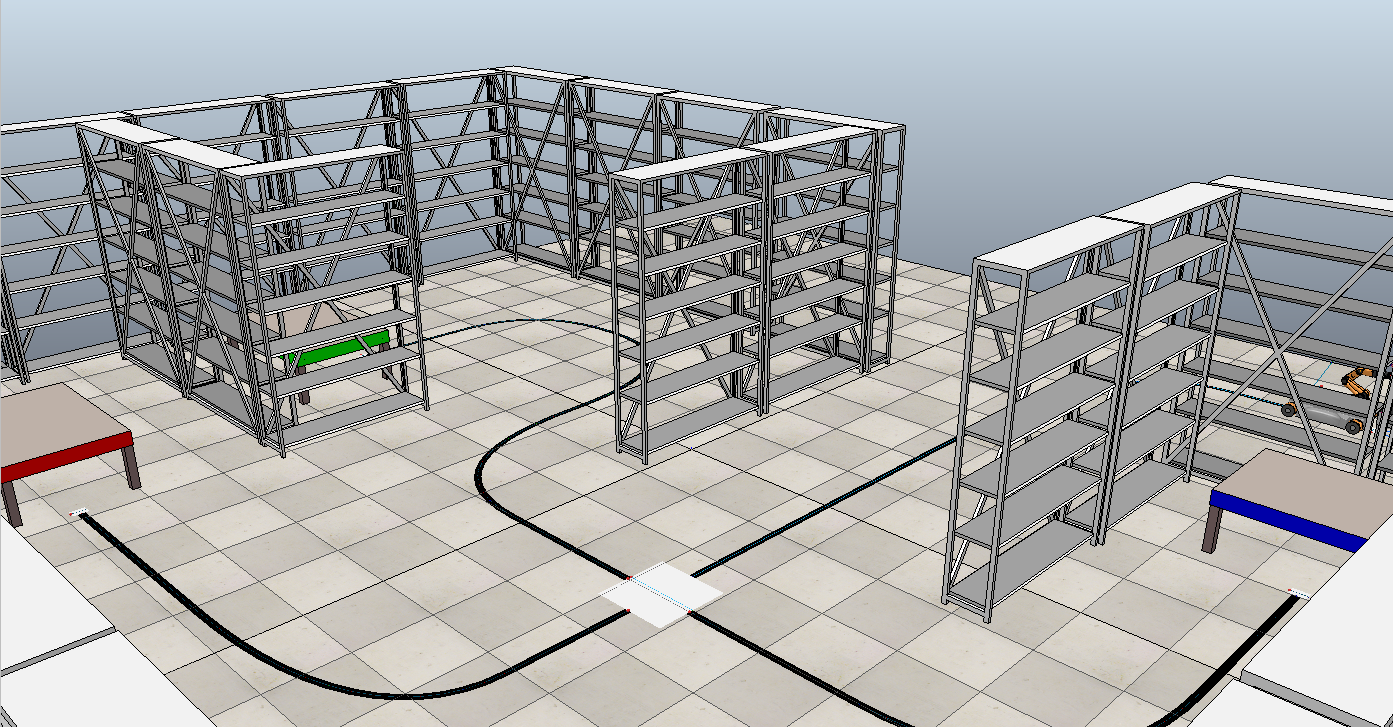

Planning and Layout

In the planning and layout phase, I built a simulated environment that mirrors the complexity of a real-world setting and challenges the KUKA youBot. The layout is a small warehouse with distinct paths, tables of different colours, and a conveyor belt that introduces a dynamic element. Proximity sensors and vision sensors were placed so that the robot could make real-time decisions based on its surroundings. This layout set the stage for the subsequent development work.

Sensory Integration and Navigation

The primary focus was on equipping the KUKA youBot with the sensory capabilities essential for seamless interaction within the simulated environment. To enable efficient object manipulation and navigation, four key sensors played pivotal roles in this phase.

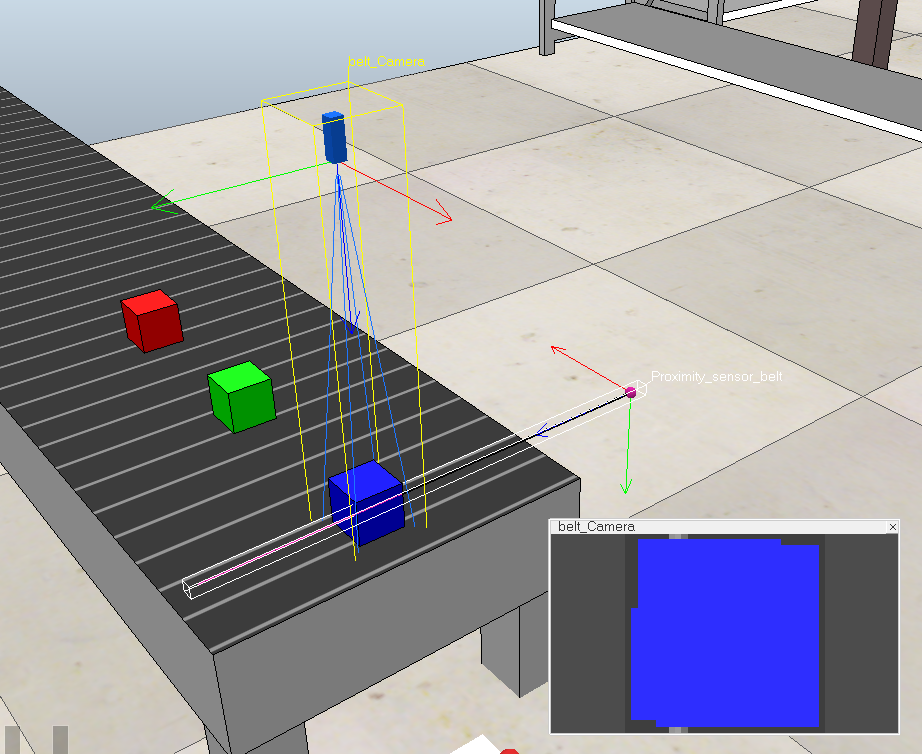

The conveyor belt was outfitted with a proximity sensor (Pink), serving as a critical element of flow control. This sensor detected the presence of objects on the belt, automatically stopping its movement to ensure precise positioning for the robot's pick-up operation. Additionally, a perspective vision sensor (Blue) was strategically positioned on the conveyor belt. This vision sensor played a fundamental role in identifying the colour of incoming objects, providing crucial input for the robot's navigation decisions.
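The belt logic described above can be sketched as follows. This is a minimal illustration written in Python, not the project's Lua script: the function names, the belt speed, the red/green/blue colour set, and the `min_margin` threshold are all assumptions made for the example, and the sensor readings are passed in as plain values instead of being read from CoppeliaSim.

```python
def classify_colour(rgb, min_margin=0.2):
    """Name the dominant colour channel of an average RGB reading.

    rgb is a tuple of three floats in [0, 1]. The red/green/blue set and
    min_margin are illustrative assumptions, not values from the project.
    Returns None when no channel clearly dominates.
    """
    channels = {"red": rgb[0], "green": rgb[1], "blue": rgb[2]}
    ranked = sorted(channels.items(), key=lambda kv: kv[1], reverse=True)
    best_name, best_value = ranked[0]
    second_value = ranked[1][1]
    if best_value - second_value < min_margin:
        return None
    return best_name


def conveyor_step(object_detected, rgb, belt_speed=0.05):
    """Return (belt velocity, detected colour) for one control step.

    The belt runs until the proximity sensor reports an object, then
    stops so the object stays in place for pick-up and its colour is read.
    """
    if not object_detected:
        return belt_speed, None
    return 0.0, classify_colour(rgb)
```

Calling `conveyor_step(True, (0.9, 0.1, 0.1))` stops the belt and reports `"red"`, while an ambiguous grey reading yields `None` so the caller can decide how to handle it.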

On the robot itself, I integrated an orthographic vision sensor (Blue), mounted on the robot's front. This sensor read the floor patterns, enabling the robot to follow predefined paths with precision. Real-time data from the orthographic sensor informed the robot's movements, allowing it to make the adjustments needed to stay on course. Additionally, a proximity sensor (Pink), located just above the orthographic sensor, served as the obstacle avoidance mechanism, preventing collisions with objects in the robot's path during navigation.
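One common way to turn such a floor reading into a steering command is a proportional controller on the line's offset from the image centre. The sketch below is in Python and is only an illustration of that idea, not the project's actual controller: it assumes a dark line on a light floor, a single row of pixel intensities in [0, 1], and hypothetical `base_speed`, `gain`, and `threshold` values.

```python
def line_offset(row, threshold=0.5):
    """Return the line centre's offset from the image centre, in [-1, 1].

    row is a list of pixel intensities in [0, 1]; pixels darker than
    threshold are treated as part of the line (an assumption).
    Returns None when no line pixels are visible.
    """
    if len(row) < 2:
        raise ValueError("row needs at least two pixels")
    dark = [i for i, value in enumerate(row) if value < threshold]
    if not dark:
        return None
    centre = sum(dark) / len(dark)
    half_width = (len(row) - 1) / 2
    return (centre - half_width) / half_width


def drive_command(row, obstacle_detected, base_speed=0.2, gain=0.5):
    """Return (forward speed, turn rate) for one control step.

    The robot stops when the proximity sensor reports an obstacle or the
    line is lost; otherwise it steers towards the line. A positive offset
    means the line is to the right, and a negative turn rate is taken to
    mean turning right (a sign convention assumed for this sketch).
    """
    if obstacle_detected:
        return 0.0, 0.0
    offset = line_offset(row)
    if offset is None:
        return 0.0, 0.0
    return base_speed, -gain * offset
```

Checking the obstacle flag first keeps collision avoidance independent of whatever the vision sensor currently sees.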

The successful integration of these sensors underscores the importance of sensor fusion and seamless data integration. By combining information from all four sensors, the youBot navigated its surroundings, made informed decisions, and executed tasks efficiently. This phase laid the foundation for a responsive and adaptive robotic system in the simulated environment.

Challenges & Lessons Learned

Object Stability: Maintaining cube stability on the youBot's tray during transport posed an early challenge.

Precise Path Following: Achieving precision in path following, especially at varying speeds, proved to be a significant challenge.

Real-Time Decision Points: Decision points demanded fast real-time data processing and immediate decisions for accurate navigation.

Innovative Problem Solving: Addressing the object stability challenge required a creative solution, such as implementing a 'sticky' approach.

Adaptive Algorithms: Achieving precise path following led to the development of adaptive algorithms to fine-tune robot movements effectively.

Real-Time Sensing: Utilizing sensors and algorithms for real-time decision-making at decision points significantly improved navigation accuracy.
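The write-up does not specify what form the adaptive path-following algorithms took. One simple possibility, sketched here in Python purely as an assumption, is to slow the robot down as its tracking error grows so that corrections have time to take effect. The function name and the speed limits are hypothetical.

```python
def adaptive_speed(offset, max_speed=0.3, min_speed=0.05):
    """Scale forward speed down linearly as the tracking error grows.

    offset is the normalised distance of the path from the sensor centre;
    values beyond [-1, 1] are clamped. The speed limits are illustrative
    assumptions, not values taken from the project.
    """
    error = min(abs(offset), 1.0)
    return max_speed - (max_speed - min_speed) * error
```

With this scheme the robot travels at full speed when centred on the path and drops towards the minimum speed as it drifts to the edge of the sensor's view.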

Conclusion

In the world of robotics and automation, the KUKA youBot project has been a fascinating journey. It delved into the automation of warehouse tasks, hinting at a future where robots could replace manual labour.

The project's careful planning and layout design recreated real-world scenarios in a virtual setting. With its integrated sensors and navigation logic, the youBot could identify, pick up, and place objects, and navigate between locations with precision.

The robot achieved a 90% success rate in the simulation, though real-world conditions are far more intricate. This project reflects a deep interest in robotics and a commitment to pushing boundaries.

As robotics continues to evolve, the KUKA youBot project stands as a testament to the drive for innovation and problem-solving in this dynamic field.